Mercury Software – The risks of ChatGPT in Staffing and Recruitment (a warning for our profession)

By Daniel Fox, Marketing Manager at Mercury

In an era when technology moves at a phenomenal pace and touches every layer of business, recruiters and staffing agencies have found a new ally in ChatGPT. This AI-driven tool offers remarkable efficiencies in content generation and data processing. However, the technology has a darker side that your teams need to be aware of, especially when it comes to handling sensitive information.

Data Security

The primary concern with using ChatGPT in recruitment is data security. ChatGPT, like many AI platforms, generates its responses from whatever data users type into it. When recruiters enter sensitive data, such as candidates’ personal details or confidential company information, that data leaves the agency’s direct control and could be exposed in a breach. The risk is greater still if the platform or the user’s own network security is compromised.

Recruitment firms should establish strict guidelines on what data can be shared with AI tools.

Unintended Data Sharing

Depending on the service and its settings, data entered into an AI tool may be retained and used to train future models. Sensitive information, once entered, may become part of a larger dataset and potentially be accessible to unauthorised parties. Many regions have strict data protection laws, such as the GDPR in Europe, and recruiters who share candidates’ personal data with AI tools might unknowingly breach them.

Staff should receive regular training on data protection, AI bias and the ethical use of AI in recruitment.

Reliability and Accuracy Issues

Another concern is the accuracy and reliability of ChatGPT’s responses. Although advanced, it is not infallible: it can produce errors, or biased output that reflects the data it was trained on.

Misinterpretation of Data

ChatGPT might misinterpret complex recruitment scenarios, leading to inappropriate or ineffective suggestions.

While ChatGPT and similar AI tools offer significant advantages in efficiency and data processing, the recruitment industry must tread cautiously. Balancing the use of AI with the need for data security, human touch, and ethical practices is crucial. Recruitment agencies should implement robust data protection measures, ensure compliance with legal standards, and maintain the human-centric approach that is at the heart of successful recruitment.

But there’s a solution…

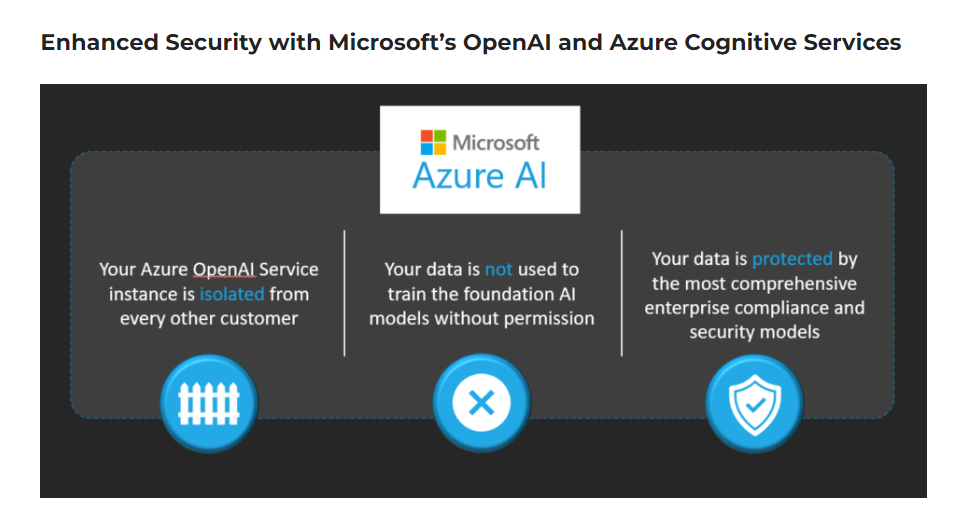

A significant advance in mitigating the data security risks of AI-driven tools is Microsoft’s Azure OpenAI Service, which brings OpenAI’s models into Azure Cognitive Services. This is particularly beneficial for recruiters and staffing agencies because it runs within the organisation’s own Azure environment. With a public service, data is sent to a third party’s servers. Azure’s framework instead processes and analyses sensitive data within the organisation’s own Azure subscription, under security controls the organisation manages. This approach substantially reduces the risk of data breaches and unauthorised access, because the data stays inside the secure perimeter of the organisation’s Azure environment.

Furthermore, Microsoft’s robust security protocols in Azure, including compliance with international data protection standards, add a further layer of protection. This allows recruitment agencies to harness AI models such as those behind OpenAI’s ChatGPT with greater confidence that sensitive candidate and company information will remain confidential and intact.

This article was originally published here.